Speed Up Your APIs: Exploring Caching Strategies for Enhanced Performance

Posted by: Deepak | July 18, 2024

Categories: API caching, Performance optimization, Backend server

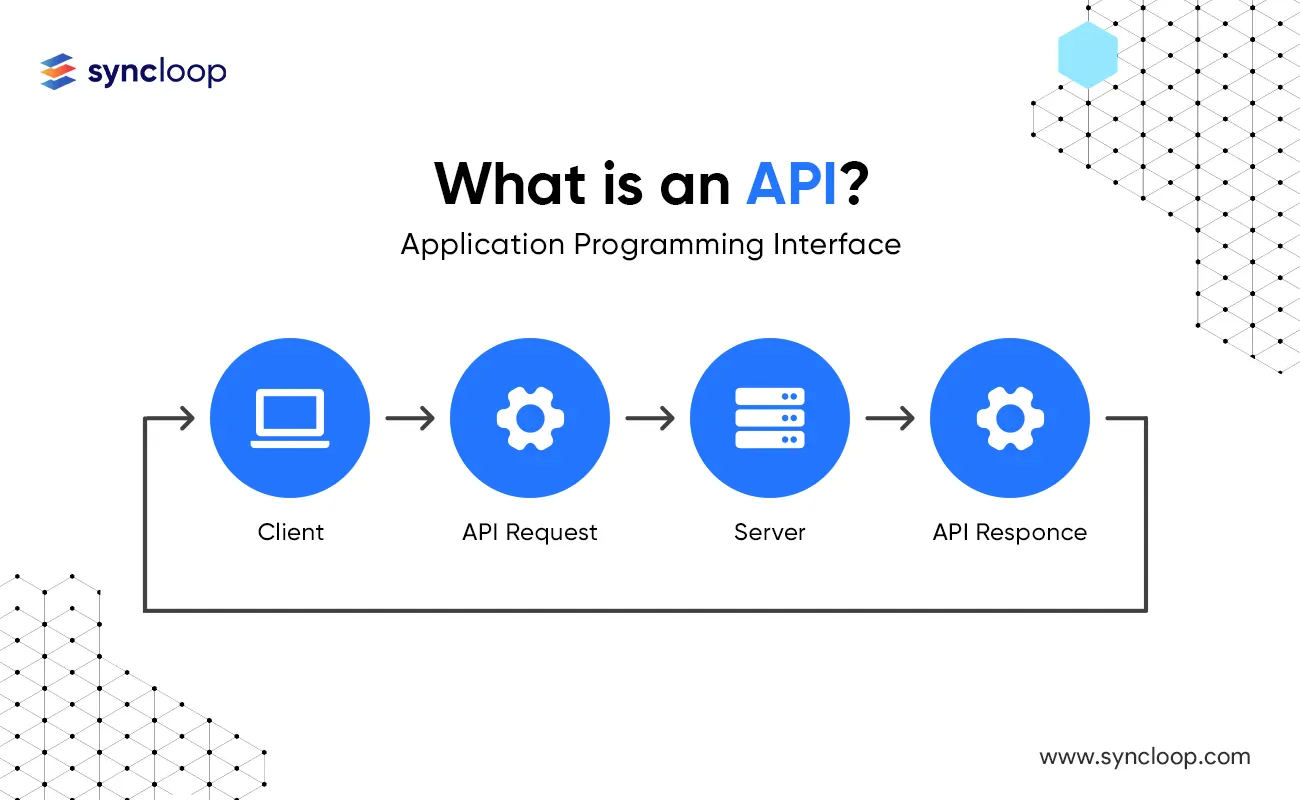

API (Application Programming Interface) performance directly shapes how responsive an application feels, and slow API responses frustrate users. Caching addresses this by storing frequently accessed data so it can be served without repeating the same work, which reduces the load on your backend servers and delivers faster responses. This blog covers the core concepts of API caching, explores common caching strategies, and discusses their benefits, implementations, and considerations.

Why API Caching Matters

One widely cited statistic illustrates how much performance affects user experience:

- Akamai's 2017 online retail performance report found that a 100-millisecond delay in website load time can hurt conversion rates by 7%.

API caching offers numerous benefits:

- Improved Response Times: By serving cached data, APIs can respond to requests significantly faster, enhancing user experience and application responsiveness.

- Reduced Server Load: Caching alleviates pressure on your backend servers by handling frequently accessed data without requiring database queries.

- Increased Scalability: Caching enables your API infrastructure to handle higher traffic volumes without compromising performance.

- Cost Optimization: Reduced server load translates to potential cost savings on server resources.

What is API Caching?

API caching involves storing a copy of frequently accessed API responses in a temporary location, typically closer to the client requesting the data. This cached data can then be served to subsequent requests without requiring the backend server to re-execute the original API logic or database queries.

Here are some key aspects of API caching:

- Cache Invalidation: Strategies are required to ensure cached data remains fresh and reflects updates to the original data source.

- Cache Expiration: Cached data can have expiration times to control how long it remains valid before being refreshed.

- Cache Hit vs. Cache Miss: A cache hit occurs when the requested data is found in the cache. A cache miss occurs when the data is not present in the cache and needs to be fetched from the backend server.
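The three aspects above can be sketched in a few lines of Python. This is a minimal illustration rather than a production cache: the `TTLCache` class, its method names, and the injectable `clock` parameter are all assumptions made for this example.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Tiny in-memory cache showing expiration, invalidation, hits, and misses."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[str, Tuple[float, Any]] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is not None:
            expires_at, value = entry
            if self._clock() < expires_at:
                self.hits += 1        # cache hit: entry is present and still fresh
                return value
            del self._store[key]      # cache expiration: entry outlived its TTL
        self.misses += 1              # cache miss: caller must go to the backend
        return None

    def set(self, key: str, value: Any) -> None:
        # Store the value together with the time at which it stops being valid.
        self._store[key] = (self._clock() + self._ttl, value)

    def invalidate(self, key: str) -> None:
        # Cache invalidation: drop the entry because the source data changed.
        self._store.pop(key, None)
```

Because `get` returns `None` on a miss, this sketch cannot distinguish a miss from a cached `None`; a real implementation would typically use a sentinel value for that.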

Common API Caching Strategies

There are several caching strategies tailored to different use cases:

- Browser Caching: Web browsers can cache static assets (e.g., images, CSS files) as well as API responses that the server marks as cacheable through HTTP headers such as Cache-Control. This reduces the need for the browser to download the same resources repeatedly and improves subsequent page load times.

- Client-Side Caching: Applications can implement client-side caching to store frequently accessed API responses locally on the user's device. This can be particularly beneficial for mobile applications with limited network bandwidth.

- Server-Side Caching: API servers can implement caching mechanisms to store frequently accessed data in memory or on a dedicated caching server. This approach offers more centralized control over cache invalidation and expiration policies.

- Cache-Aside Pattern: The application checks the cache first. If the data is not found, it is fetched from the backend data source, stored in the cache, and then returned to the client. This pattern is suitable for read-heavy APIs.

- Write-Through Pattern: This approach updates both the cache and the backend data source as part of the same write operation. It keeps the cache consistent with the data source, but every write has to complete in both places, which adds overhead to write operations.
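The cache-aside and write-through patterns described above can be sketched as follows. Two plain dictionaries stand in for the cache and the database, and the names `read_cache_aside` and `write_through` are hypothetical, chosen only for this illustration.

```python
from typing import Dict, Optional

# Hypothetical stand-ins: one dict plays the database, another plays the cache.
database: Dict[str, str] = {"user:1": "Ada"}
cache: Dict[str, str] = {}


def read_cache_aside(key: str) -> Optional[str]:
    """Cache-aside read: check the cache, fall back to the data source on a miss."""
    if key in cache:
        return cache[key]          # hit: the backend is not touched
    value = database.get(key)      # miss: fetch from the source of truth
    if value is not None:
        cache[key] = value         # populate the cache for later reads
    return value


def write_through(key: str, value: str) -> None:
    """Write-through: update the data source and the cache in the same operation."""
    database[key] = value          # write the source of truth first
    cache[key] = value             # then refresh the cache so reads stay consistent
```

In a real deployment the two writes in `write_through` go to separate systems, so a failure between them has to be handled explicitly; this sketch ignores that case.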

Integrating Caching Strategies Across Industries

Here's how API caching can be applied in specific industries:

- E-commerce: E-commerce platforms can leverage browser caching for static content like product images or category descriptions. Server-side caching can be implemented for frequently accessed product details or shopping cart information to improve response times for returning users.

- Social Media: Social media platforms can utilize browser caching for static assets like profile pictures or logos. Client-side caching can be implemented for user feeds or frequently viewed content. Server-side caching can be used for popular hashtags or trending topics to reduce database load.

- FinTech: Financial institutions can leverage server-side caching for frequently accessed account balances or market data. Cache invalidation strategies become crucial to ensure data accuracy for sensitive financial information.
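For responses that travel over HTTP, the choices above usually show up in the Cache-Control response header. The sketch below maps three hypothetical response categories to header values; the category names and the specific max-age durations are assumptions for illustration, not recommendations.

```python
def cache_control_for(kind: str) -> str:
    """Return a Cache-Control header value for a hypothetical response category."""
    policies = {
        # Static assets such as product images: any cache may keep them for a day.
        "static": "public, max-age=86400",
        # Per-user data such as a feed: only the user's own browser, and briefly.
        "personal": "private, max-age=60",
        # Sensitive data such as account balances: no cache should store it.
        "sensitive": "no-store",
    }
    # Fall back to the most conservative policy when the category is unknown.
    return policies.get(kind, "no-store")
```

Defaulting to `no-store` means a response that nobody classified is never cached by accident, which is the safer failure mode for financial data.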

Benefits and Use Cases Across Industries

- Content Management Systems (CMS): CMS platforms can utilize caching to store frequently accessed page content, reducing database load and improving website performance for visitors.

- News Aggregators: News aggregators can leverage caching to store retrieved news articles for a predefined period, minimizing API calls to external news sources for frequently accessed content.

Latest Tools and Technologies for API Caching

The API landscape offers various tools and technologies to support your API caching strategy:

Caching Servers:

- Memcached: A high-performance in-memory caching server used for frequently accessed data with short expiry times.

- Redis: A popular in-memory data store with advanced caching functionalities including data structures well-suited for caching and persistence options for more durable data storage.

Gateways and Libraries:

- API Gateway Caching: API gateways like Syncloop, Apigee, Kong, and AWS API Gateway can be configured to implement caching functionalities at the gateway level, offloading caching logic from backend servers.

- Caching Libraries: Programming languages often provide caching libraries that simplify cache interaction and management within your API code. In Python, for example, the standard library offers functools.lru_cache, the cachetools package provides additional in-memory cache types, and Django ships with a built-in cache framework.

Disadvantages and Considerations

While API caching offers significant benefits, there are also some key considerations:

- Cache Invalidation Complexity: Ensuring cached data remains consistent with the backend data source can be challenging, especially for frequently updated data.

- Cache Size and Management: Large cache sizes can consume memory resources and require careful management to avoid performance bottlenecks.

- Not Suitable for All Data: Caching is not ideal for frequently changing data or data requiring strong consistency guarantees.
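One common way to keep cache size under control is to cap the number of entries and evict the least recently used one when the cap is exceeded. The sketch below shows that idea with `collections.OrderedDict`; the `LRUCache` class and its `max_entries` parameter are assumptions for this example, and other eviction policies are equally valid.

```python
from collections import OrderedDict
from typing import Any, Optional


class LRUCache:
    """Bounded cache that evicts the least recently used entry when full."""

    def __init__(self, max_entries: int) -> None:
        if max_entries < 1:
            raise ValueError("max_entries must be at least 1")
        self._max = max_entries
        self._data: "OrderedDict[str, Any]" = OrderedDict()

    def get(self, key: str) -> Optional[Any]:
        if key not in self._data:
            return None
        self._data.move_to_end(key)          # mark as most recently used
        return self._data[key]

    def set(self, key: str, value: Any) -> None:
        self._data[key] = value
        self._data.move_to_end(key)          # new or updated entries are most recent
        if len(self._data) > self._max:
            self._data.popitem(last=False)   # evict the least recently used entry

    def __len__(self) -> int:
        return len(self._data)
```

Capping by entry count is only a rough proxy for memory use; entries of very different sizes may call for a byte-based limit instead.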

Conclusion

API caching is a powerful technique to optimize API performance and enhance user experience. By understanding different caching strategies and implementing them appropriately within your API architecture, you can significantly reduce server load, improve response times, and ensure your APIs scale effectively to meet growing demands. Syncloop, along with your chosen caching technologies and development methodologies, can be a valuable asset in your journey towards building high-performance and scalable APIs. Remember, a well-defined API caching strategy is crucial for delivering fast and responsive APIs that empower your applications and delight your users.
