Taming the Beast: Implementing API Rate Limiting and Throttling

Posted by: Deepak | May 23, 2024

Categories: API rate limiting, API throttling, Backend stability

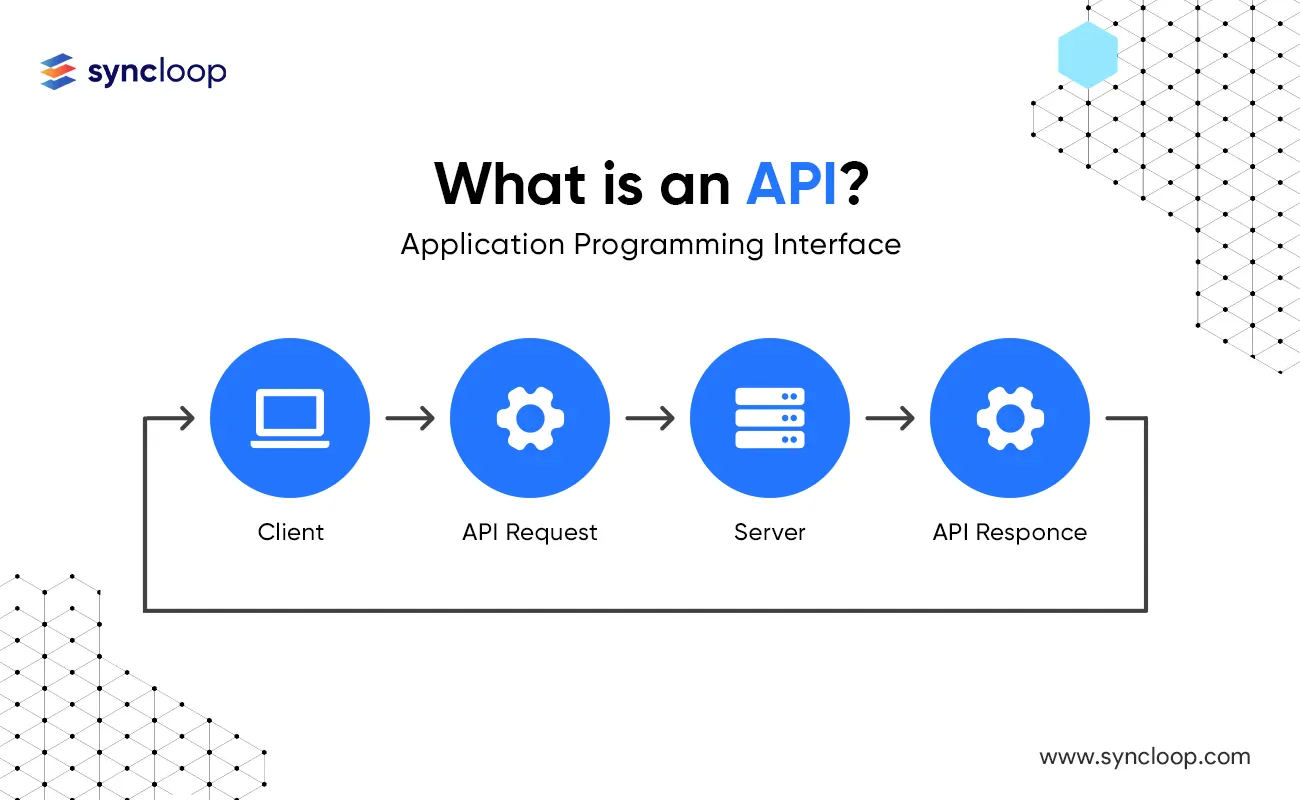

In today's API-driven world, ensuring the smooth operation and stability of your backend services is paramount. A critical aspect of achieving this is managing API traffic effectively. Uncontrolled request surges can overwhelm your servers, leading to performance degradation, outages, and a frustrating user experience.

This is where API rate limiting and throttling come into play. These techniques regulate the flow of incoming requests, typically at the API gateway or in the application itself, and protect your infrastructure from overload.

What's the Difference?

While often used interchangeably, there's a subtle distinction between rate limiting and throttling:

Rate Limiting: Defines a hard cap on the number of requests allowed within a specific time window (e.g., requests per second, minute, or hour), usually per client. Requests beyond the cap are rejected, typically with an HTTP 429 Too Many Requests response, until the window resets.

Throttling: Offers a more dynamic approach. Instead of rejecting requests once the limit is reached, it lets them through at a slower pace, for example by queuing or delaying them, or by reducing the quality of service (QoS) for subsequent requests.
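To make the distinction concrete, here is a minimal Python sketch, assuming a single-process service that keeps its counters in memory. `FixedWindowLimiter` and `Throttler` are hypothetical names chosen for illustration: the limiter rejects requests once a per-window quota is spent, while the throttler admits every request but tells the caller how long to wait so that requests proceed no faster than a fixed rate. The injectable `clock` parameter is an assumption added only to make the behavior easy to test.

```python
import time
from typing import Callable, Optional


class FixedWindowLimiter:
    """Rate limiting: a hard cap of `limit` requests per `window` seconds."""

    def __init__(self, limit: int, window: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window = window
        self.clock = clock
        self.window_start = clock()
        self.count = 0

    def allow(self) -> bool:
        now = self.clock()
        # Start a fresh window once the current one has expired.
        if now - self.window_start >= self.window:
            self.window_start = now
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False  # Over the cap: reject (e.g. respond with HTTP 429).


class Throttler:
    """Throttling: admit every request, but no faster than `rate` per second."""

    def __init__(self, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.interval = 1.0 / rate
        self.clock = clock
        self.next_slot: Optional[float] = None

    def delay(self) -> float:
        """Return how many seconds the caller should wait before proceeding."""
        now = self.clock()
        # If the schedule has fallen behind real time, restart it from now.
        if self.next_slot is None or self.next_slot < now:
            self.next_slot = now
        wait = self.next_slot - now
        self.next_slot += self.interval  # reserve the following slot
        return wait
```

A fixed window is the simplest counter to implement; its known weakness is that a client can send up to twice the limit in a short span by straddling a window boundary, which is what sliding-window and bucket algorithms address.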

Why Implement Rate Limiting and Throttling?

The benefits of implementing these techniques are numerous:

- Helps mitigate Denial-of-Service (DoS) attacks: Malicious actors often employ DoS attacks to flood your API with requests, rendering it inaccessible to legitimate users. Rate limiting and throttling can blunt such attempts by capping the number of requests from a single source.

- Improves API performance: By regulating traffic flow, you ensure your servers aren't overwhelmed by sudden spikes in requests. This leads to faster response times and a more consistent user experience.

- Fairness and Resource Management: Prevents a single user or application from monopolizing resources, ensuring fair access for all API consumers.

- Cost Optimization: By preventing unnecessary traffic, you can optimize your cloud resource utilization and potentially reduce costs.

Statistics that Reinforce the Importance:

A 2022 report by Akamai indicates that API abuse attempts have grown by a staggering 181%. This highlights the increasing need for robust API protection mechanisms.

A study by Radware found that 40% of organizations experienced an API-related security incident in the past year. Implementing rate limiting and throttling can reduce part of this risk, particularly for attacks that rely on high request volumes.

Examples and Use Cases:

- E-commerce Platforms: Here, rate limiting can be used to prevent bots from scalping limited-edition products by restricting the number of purchase requests per user within a short timeframe.

- Social Media APIs: Throttling can be beneficial to manage excessive content creation or rapid following requests from a single account, potentially indicating automated activity.

- Payment Gateways: Rate limiting can be crucial to safeguard against fraudulent transactions. Limiting login attempts and transaction requests per user can significantly reduce the risk of unauthorized access.
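Per-user limits such as those described for payment gateways need a separate counter for each client. Below is a minimal sketch of a sliding-window log, assuming a single process with in-memory state; `SlidingWindowLimiter`, the user IDs, and the "5 attempts per 15 minutes" policy are hypothetical. Unlike a fixed window, it counts requests in the trailing `window` seconds, so a client cannot double its quota by straddling a window boundary.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class SlidingWindowLimiter:
    """Allow at most `limit` requests per key within any trailing `window` seconds."""

    def __init__(self, limit: int, window: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window = window
        self.clock = clock
        # One timestamp log per key (user ID, API key, client IP, ...).
        self.logs: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, key: str) -> bool:
        now = self.clock()
        log = self.logs[key]
        # Drop timestamps that have fallen out of the trailing window.
        while log and log[0] <= now - self.window:
            log.popleft()
        if len(log) < self.limit:
            log.append(now)  # only admitted requests are recorded
            return True
        return False


# Hypothetical policy: 5 login attempts per user per 15 minutes.
login_limiter = SlidingWindowLimiter(limit=5, window=15 * 60)
```

Because rejected attempts are not recorded, a blocked client regains capacity as its oldest admitted requests age out. The log stores up to `limit` timestamps per key, which is why high-volume limits often use approximate counters instead.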

Tools and Technologies:

- API Gateway Solutions: Cloud providers offer managed gateways with built-in rate limiting and throttling, such as Amazon API Gateway (AWS) and Azure API Management.

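- Open-Source Libraries: In the Java ecosystem, libraries such as Resilience4j (its RateLimiter module), Bucket4j, and Guava's RateLimiter provide granular control over rate limiting, and Apache Camel offers a Throttler pattern for message routes. Netflix's Hystrix, often cited in this context, is a fault-tolerance library focused on circuit breaking and concurrency isolation rather than request rate limiting, and has been in maintenance mode since 2018.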

- Custom-built Solutions: For specific needs and complex scenarios, developers can create custom rate limiting and throttling mechanisms using programming languages like Python or Java.
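A custom-built mechanism can be quite small. The sketch below assumes a framework-agnostic handler that returns a `(status, headers, body)` tuple; `rate_limited`, `allow`, and `key_func` are hypothetical names, not part of any framework. Any limiter that can make a per-key yes/no decision can be plugged in as `allow`.

```python
import functools
from typing import Any, Callable, Dict, Tuple

Response = Tuple[int, Dict[str, str], str]  # (status, headers, body)


def rate_limited(allow: Callable[[str], bool],
                 key_func: Callable[..., str]) -> Callable:
    """Wrap a request handler so it only runs when `allow(key)` returns True."""
    def decorator(handler: Callable[..., Response]) -> Callable[..., Response]:
        @functools.wraps(handler)
        def wrapper(*args: Any, **kwargs: Any) -> Response:
            key = key_func(*args, **kwargs)  # e.g. user ID, API key, or client IP
            if not allow(key):
                # Reject before doing any backend work.
                return 429, {}, "Too Many Requests"
            return handler(*args, **kwargs)
        return wrapper
    return decorator
```

For example, `@rate_limited(limiter.allow, key_func=lambda user_id: user_id)` would guard a `get_profile(user_id)` handler with any per-key limiter object exposing an `allow(key)` method. Keeping the decision behind a plain callable lets you swap algorithms without touching handlers.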

Integration Process:

The integration process varies depending on the chosen technology. Here's a general outline:

- Define Rate Limits and Throttling Policies: Determine appropriate limits based on your API's capacity and expected usage patterns. Consider factors like request types, user roles, and time windows.

- Choose the Implementation Method: Select the most suitable approach based on your technical stack and desired level of control. Cloud API gateways offer a user-friendly configuration interface, while libraries or custom solutions require coding expertise.

- Configuration and Deployment: Configure the chosen method with your defined policies. Thoroughly test the implementation to ensure it functions as expected before deploying to production.
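The first step, defining policies by user role, request type, and time window, can be expressed as plain data. This is a minimal sketch in which `RatePolicy`, `POLICIES`, `policy_for`, the roles, endpoints, and all numbers are hypothetical placeholders; real values should come from your own capacity planning and usage data.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class RatePolicy:
    limit: int      # maximum number of requests...
    window: float   # ...allowed per this many seconds


# Hypothetical policy table keyed by (user role, endpoint).
# An endpoint of None means "any endpoint" for that role.
POLICIES: Dict[Tuple[str, Optional[str]], RatePolicy] = {
    ("free", None): RatePolicy(limit=100, window=60.0),
    ("free", "POST /orders"): RatePolicy(limit=10, window=60.0),
    ("premium", None): RatePolicy(limit=1000, window=60.0),
}

# Fallback for unknown or anonymous callers.
DEFAULT_POLICY = RatePolicy(limit=60, window=3600.0)


def policy_for(role: str, endpoint: str) -> RatePolicy:
    """Return the most specific policy: endpoint-level, then role-wide, then default."""
    for key in ((role, endpoint), (role, None)):
        policy = POLICIES.get(key)
        if policy is not None:
            return policy
    return DEFAULT_POLICY
```

Keeping policies as data rather than scattering numbers through code makes them easier to review, test, and adjust after monitoring.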

Benefits and Considerations:

Benefits:

- Predictable Performance Under Load: By rejecting or delaying excess traffic, your APIs keep operating within their capacity during load spikes instead of degrading for every client.

- Enhanced Security: Mitigates the risk of API abuse and DoS attacks.

- Granular Control: Provides the ability to tailor policies to specific API endpoints or user groups.

Considerations:

- Setting Appropriate Limits: Setting limits too low can frustrate legitimate users, while setting them too high may leave your API vulnerable to overload and abuse.

- False Positives: Fine-tuning is crucial to avoid accidentally blocking legitimate traffic.

- Distributed Denial-of-Service (DDoS) Attacks: While rate limiting and throttling can help, they might not be sufficient against sophisticated DDoS attacks that originate from a vast network of compromised machines. Consider implementing additional security measures like IP reputation checks and CAPTCHAs to mitigate such threats.

- Monitoring and Analytics: Continuously monitor API traffic patterns and analyze the effectiveness of your rate limiting and throttling strategies. This helps you identify and adjust policies as needed.

- API Documentation: Clearly document your rate limiting and throttling policies within your API documentation. This informs developers about usage expectations and helps them design their applications accordingly.
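Beyond written documentation, many APIs also communicate limits at runtime through response headers. HTTP 429 (RFC 6585) and the `Retry-After` header (RFC 9110) are standardized; the `X-RateLimit-*` headers below are a widespread convention whose exact names and semantics vary by provider. The helper names are hypothetical, and this sketch assumes the same `(status, headers, body)` tuple shape as a plain handler would return.

```python
import math
from typing import Dict, Tuple


def rate_limit_headers(limit: int, remaining: int, reset_after: float) -> Dict[str, str]:
    """Headers advertising the client's current quota (convention, not a standard)."""
    return {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(max(remaining, 0)),
        # Seconds until the quota resets; some providers send a Unix timestamp instead.
        "X-RateLimit-Reset": str(math.ceil(reset_after)),
    }


def too_many_requests(limit: int, reset_after: float) -> Tuple[int, Dict[str, str], str]:
    """Build a 429 response; Retry-After is expressed in whole seconds."""
    headers = rate_limit_headers(limit, 0, reset_after)
    headers["Retry-After"] = str(math.ceil(reset_after))
    return 429, headers, "Rate limit exceeded. Retry later."
```

Well-behaved clients can read `Retry-After` and back off instead of retrying immediately, and the same numbers are useful signals to log for monitoring.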

Advanced Techniques:

Leaky Bucket Algorithm: This algorithm visualizes rate limiting as a bucket with a fixed capacity and a leak at the bottom. Incoming requests fill the bucket, which drains (that is, requests are processed) at a constant rate. If requests arrive faster than the bucket drains and it fills up, further requests are rejected. The leak rate caps sustained throughput, and the capacity bounds how many requests can be held at once.
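A minimal sketch of the leaky bucket in Python, assuming a single process. Note that this is the "leaky bucket as a meter" variant: it makes the same admit/reject decisions as the queue form but does not hold requests; a queue-based version would additionally release admitted requests at the leak rate. `LeakyBucket` and its parameters are hypothetical names.

```python
import time
from typing import Callable


class LeakyBucket:
    """Each request adds one unit of 'water'; the bucket drains at
    `leak_rate` units per second and holds at most `capacity` units."""

    def __init__(self, capacity: float, leak_rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.leak_rate = leak_rate
        self.clock = clock
        self.level = 0.0
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Drain whatever has leaked out since the last check.
        self.level = max(0.0, self.level - (now - self.last) * self.leak_rate)
        self.last = now
        if self.level + 1 <= self.capacity:
            self.level += 1
            return True
        return False  # the bucket would overflow: reject
```

With `capacity=3` and `leak_rate=1.0`, three back-to-back requests fit, the fourth is rejected, and one more slot frees up each second after that.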

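Token Bucket Algorithm: This approach tolerates more burstiness than the Leaky Bucket. Tokens are added to a bucket at a fixed rate, up to a maximum capacity. Each request consumes a token; when the bucket is empty, the request is either rejected or made to wait until a token becomes available. Because unused tokens accumulate up to the capacity, a client that has been idle can send a short burst of requests exceeding the average rate.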
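A minimal token bucket sketch in Python, assuming a single process with in-memory state; `TokenBucket`, `allow`, and `wait_time` are hypothetical names. `allow` implements the reject-when-empty behavior (rate limiting), while `wait_time` supports the wait-for-a-token behavior (throttling).

```python
import time
from typing import Callable


class TokenBucket:
    """Tokens refill at `rate` per second up to `capacity`; each request spends `cost`."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity  # start full, so an idle client may burst immediately
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        # Add tokens earned since the last call, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def allow(self, cost: float = 1.0) -> bool:
        """Spend `cost` tokens if available; otherwise reject."""
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False

    def wait_time(self, cost: float = 1.0) -> float:
        """Seconds until `cost` tokens will be available (0 if available now)."""
        self._refill()
        return max(0.0, (cost - self.tokens) / self.rate)
```

With `capacity=5` and `rate=1.0`, an idle client can fire five requests at once, after which it is held to roughly one request per second. The capacity sets the burst size and the rate sets the long-run average, which is why the two are usually tuned separately.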

Choosing the Right Approach:

The optimal approach depends on your specific requirements and API usage patterns. Here's a simplified guideline:

For simple scenarios with predictable traffic: Cloud API gateway solutions or open-source libraries with pre-built functionalities might suffice.

For complex scenarios with dynamic traffic patterns or the need for fine-grained control: Consider custom-built solutions using programming languages like Python or Java. This offers maximum flexibility but requires development expertise.

Conclusion

Implementing API rate limiting and throttling is a crucial strategy for ensuring the stability, performance, and security of your APIs. By understanding the concepts, choosing the right tools, and carefully configuring your policies, you can effectively manage API traffic and safeguard your backend infrastructure. Remember, it's an ongoing process that requires continuous monitoring and adjustments to adapt to evolving usage patterns and potential threats.
